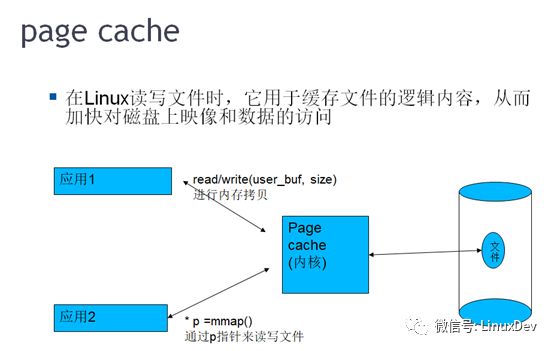

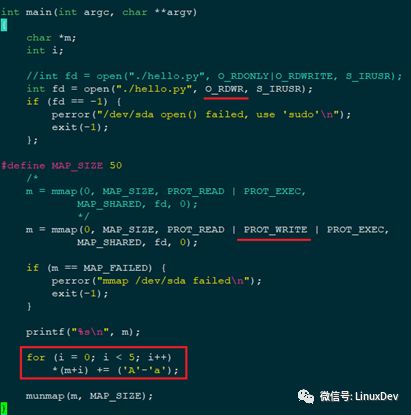

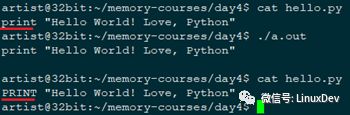

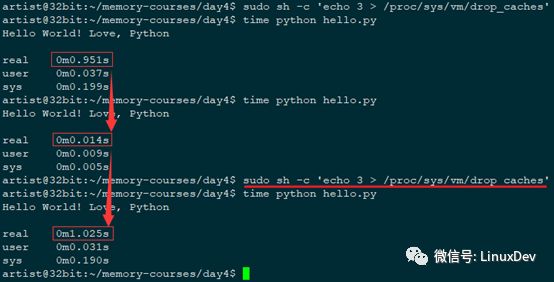

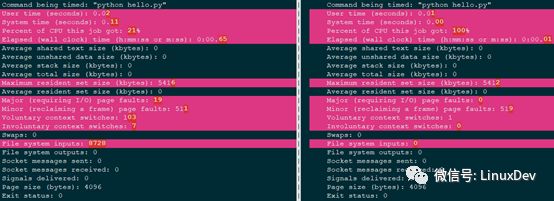

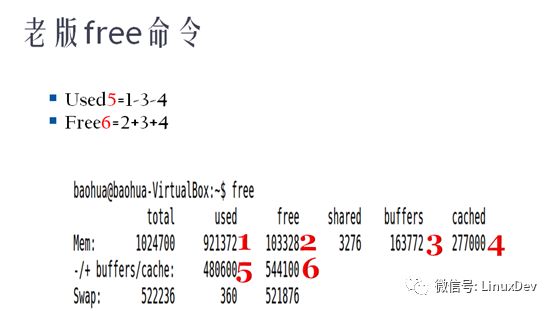

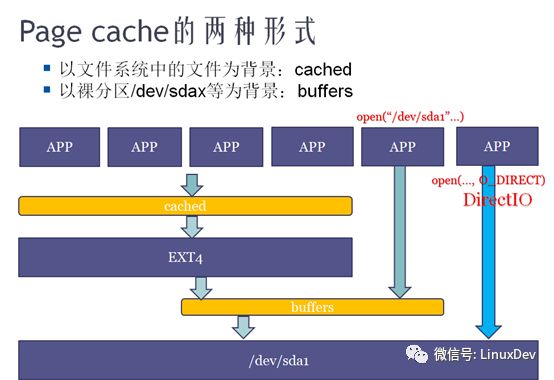

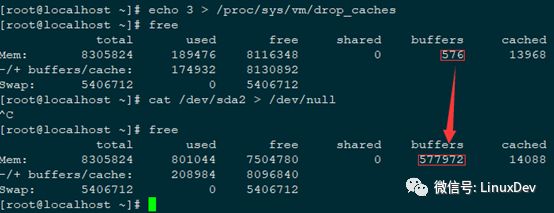

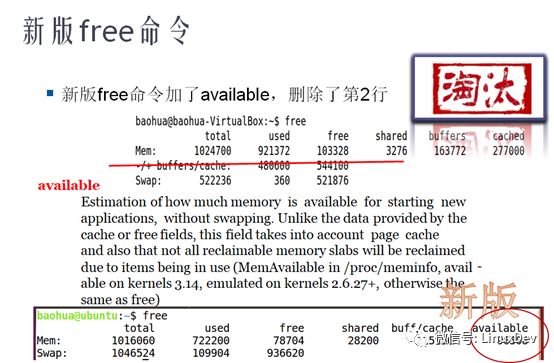

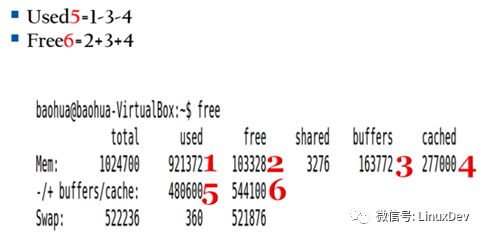

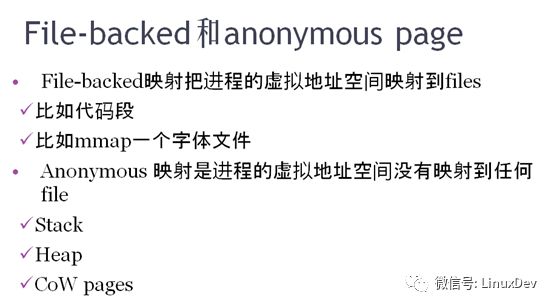

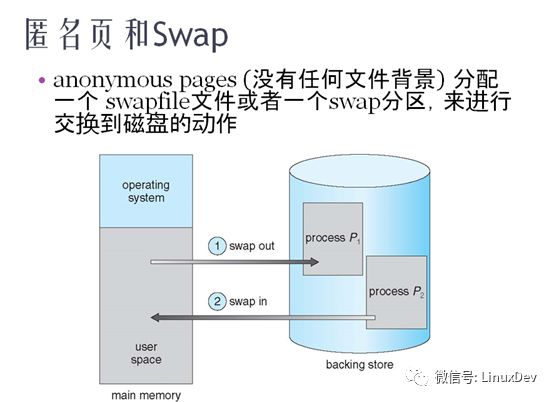

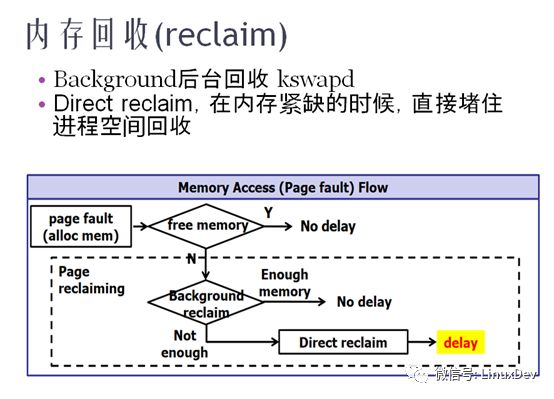

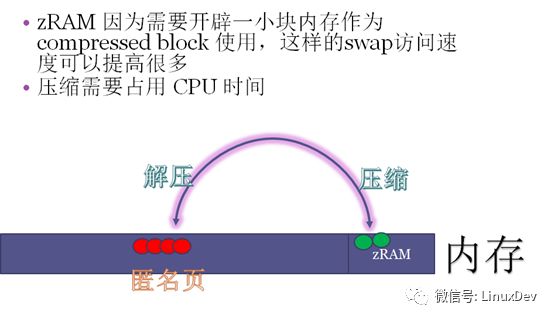

Linux always allocates memory to applications lazily. This applies to the heap, the stack (the deeper the call chain, the more stack is used, and each new stack page is obtained through a page fault), the code segment, and the data segment. So once these segments have actually been given memory, do they keep occupying it?

1. Page cache

There are two main ways to read and write files on Linux:

Read/write: when read is called to read a file, the Linux kernel allocates page cache, reads the file into the page cache, and then copies the data from the kernel-space page cache into the user-space buffer. When write is called to write a file, the user-space buffer is copied into the kernel-space page cache.

mmap: mmap avoids copying buffers between user space and kernel space. The file is mapped directly to a virtual address, and that address points at the page cache the kernel allocated. The kernel knows which page cache pages correspond to which parts of the file on disk.

The figures show a program that reads and writes a file with mmap. Note that the mapping's read/write permissions must match the intended access; otherwise a page fault is triggered. Compile and run it (see figure).

On the surface, mmap makes a virtual address correspond to a file, so the file can be accessed directly through a pointer. In essence, it maps part of the process's virtual address space onto DRAM, namely the memory the kernel allocated as page cache. That page cache corresponds to a file on disk, and the Linux kernel keeps the page cache and the file on disk in sync (see figure).

The page cache can be viewed as an in-memory cache of the disk. When an application writes a file, the data actually goes into the page cache; it reaches the file on disk only when it is written back, which calling sync forces. The program headers of an ELF executable record where the code segment is located; loading the code segment essentially maps that part of the ELF file directly into a virtual address range with R+X permissions. The page cache can greatly improve overall system performance.
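
The mmap demo in the figures is only available as screenshots. As a hedged stand-in, here is a minimal Python sketch of the same two paths. It assumes a Linux system and uses only the standard `os`, `mmap` and `tempfile` modules. The helper names `read_with_read` and `upper_with_mmap` are hypothetical and chosen for illustration.

- The first function reads through read(2), which copies page-cache data into user buffers.
- The second modifies the file in place through a shared, writable mapping of its page cache. It then calls `flush()` (msync) to force write-back, which plays the role of sync in the text.

Note that the file must be opened read/write for the writable mapping. The kernel rejects a writable shared mapping of a read-only descriptor.

```python
import mmap
import os
import tempfile


def read_with_read(path: str) -> bytes:
    """Read a whole file with read(2).

    The kernel first brings the file into the page cache (if it is not cached yet),
    then each os.read() call copies bytes from the page cache into a new user-space buffer.
    """
    fd = os.open(path, os.O_RDONLY)
    try:
        chunks = []
        while True:
            chunk = os.read(fd, 4096)  # copy up to 4 KiB from the page cache
            if not chunk:              # empty result means end of file
                break
            chunks.append(chunk)
        return b"".join(chunks)
    finally:
        os.close(fd)


def upper_with_mmap(path: str) -> bytes:
    """Upper-case a (non-empty) file in place through a shared, writable mapping.

    The file must be opened read/write: a writable shared mapping of a read-only
    descriptor is rejected by the kernel.
    """
    with open(path, "r+b") as f:
        # Length 0 maps the whole file; ACCESS_WRITE means MAP_SHARED + PROT_READ|PROT_WRITE.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_WRITE) as m:
            m[:] = m[:].upper()  # stores land directly in the page-cache pages, no write() call
            m.flush()            # msync(): write the dirty pages back to the file on disk
            return m[:]


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"hello page cache\n")
        path = tmp.name
    try:
        print(read_with_read(path))   # b'hello page cache\n'
        print(upper_with_mmap(path))  # b'HELLO PAGE CACHE\n'
        print(read_with_read(path))   # b'HELLO PAGE CACHE\n' (read() sees the same page cache)
    finally:
        os.unlink(path)
```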

To illustrate how the page cache improves performance: when process A reads a file, the kernel allocates page cache for that file and records the correspondence. When process B later reads the same file, it hits the existing page cache directly, and reads and writes are significantly faster. Note, however, that page cache pages are replaced according to the LRU (least recently used) algorithm.

Demo (see figure): the effect of the page cache on program execution time. The first run performs a lot of disk I/O. On the second run, much of what Python needs is already in memory, so it runs significantly faster. Compare the two runs again with the `time -v` command.

Notes:

i. "Swap" has two senses. As a verb, swapping means moving pages back and forth between memory and disk; as a noun, it refers to the swap partition.

ii. Caches can be forcibly dropped through /proc/sys/vm/drop_caches: write 1 to free the page cache, 2 to free dentries and inodes, and 3 to free both.

2. The free command explained

In the figure, buffers and cached are both file-system caches. There is no essential difference between them; they differ only in how the cached data is accessed:

i. When files are accessed through a file system (ext4, xfs, etc.), the resulting cache is reported in the cached column of free. For example, after `mount /dev/sda1 /mnt` the /mnt directory contains many files, and accessing them fills this cache.

ii. When /dev/sda1 is accessed directly, the resulting cache is reported in the buffers column of free. This covers a user program calling `open("/dev/sda1", ...)`, running the dd command, or the file system itself accessing the raw partition.

Demo (see figure): reading a raw partition of the hard disk changes what free reports.

Since Linux kernel 3.14, a new version of free has been in use (see figure). In the old version, the -/+ buffers/cache line has the meaning shown in the figure. The new free adds an available column, which estimates how much memory is available for applications to use without swapping.

3. File-backed pages and anonymous pages

The page cache, like the CPU's internal caches, can be replaced. File-backed pages can be swapped out to disk. For example, start firefox, run a program that exhausts memory (an OOM program), and compare firefox's smaps file before and after. Under memory pressure, firefox's code segment and its mmapped font files are evicted from memory.

What about anonymous pages, which have no backing file? Do they stay resident in memory? As the figure shows, both file-backed pages and anonymous pages can be swapped. File-backed pages are swapped against their own backing files, while anonymous pages are swapped to a swap partition or swap file. Even if CONFIG_SWAP is disabled when the kernel is built (which only turns off swapping of anonymous pages), the kernel's kswapd thread still swaps out file-backed pages.

Linux has three watermarks: min, low, and high.

- When free memory falls to the low watermark, reclaim starts automatically in the background (kswapd). It continues until free memory reaches the high watermark.
- When free memory falls to the min watermark, the allocating process is blocked and performs reclaim directly.

Both anonymous pages and file-backed pages can be reclaimed. When /proc/sys/vm/swappiness is large, the kernel tends to reclaim anonymous pages; when it is small, it tends to reclaim file-backed pages. In both cases the reclaim algorithm is LRU.

Note: the data segment is a special case. As long as it has not been written, it is file-backed; once a page of it is written, that page becomes an anonymous page. "Virtual memory" in Windows is the equivalent of a Linux swap file.

4. Page reclaim and LRU

As the figure shows, by the fourth column page 1 is the least recently used. In the fifth column, page 1 is accessed again, so page 2 becomes the least recently used. In the sixth column, page 2 is accessed again, so page 3 becomes the least recently used.
Therefore, in the seventh column, when a new page 5 is accessed, page 3 is evicted.

5. Swap and zRAM

Embedded systems are constrained by their flash storage and rarely use swap partitions; swap is typically turned off (swapoff). Embedded systems therefore introduced zRAM. zRAM carves out a block of memory, presents it as a disk partition, and uses that partition for swap. The partition compresses data transparently: when an anonymous page is written to the zRAM partition, the Linux kernel has the CPU compress it.

Later, the application may access that anonymous page again. The page is no longer in memory because it was swapped out to zRAM, so the page table has no valid entry and the access triggers a page fault. Linux then transparently decompresses the page from the zRAM partition and brings it back into memory.

In short, zRAM uses memory as a swap partition with transparent compression (two anonymous pages may compress into one page) and transparent decompression (one page decompresses back into two). This effectively expands memory, at the cost of more CPU time.
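
To make the "two pages compress into one" claim concrete, here is a rough Python sketch under loud assumptions:

- The page size is 4 KiB.
- zlib from the standard library stands in for the kernel's compressors. Real zRAM uses algorithms such as lzo, lz4 or zstd, selected through its comp_algorithm sysfs attribute.
- The packing estimate ignores the real zRAM allocator's layout and overhead.

The helper names `compressed_page_sizes` and `zram_pages_needed` are hypothetical. The sketch compresses each page independently, as zRAM does for each swapped-out page.

```python
import random
import zlib

PAGE_SIZE = 4096  # assumption: 4 KiB pages


def compressed_page_sizes(pages: list[bytes]) -> list[int]:
    """Compress every page on its own, the way zRAM compresses each swapped-out page."""
    return [len(zlib.compress(page)) for page in pages]


def zram_pages_needed(pages: list[bytes]) -> int:
    """Rough estimate of how many pages the compressed copies occupy if packed back to back.

    The real zRAM allocator has its own packing rules and overhead, which this ignores.
    """
    total = sum(compressed_page_sizes(pages))
    return -(-total // PAGE_SIZE)  # ceiling division


if __name__ == "__main__":
    zero_page = bytes(PAGE_SIZE)                             # e.g. freshly zeroed heap memory
    text_page = (b"anonymous page data " * 300)[:PAGE_SIZE]  # highly repetitive contents
    noise_page = random.Random(0).randbytes(PAGE_SIZE)       # incompressible contents

    print(zram_pages_needed([zero_page, text_page]))  # 1: two pages shrink into one
    print(zram_pages_needed([noise_page]))            # 2: zlib output is slightly larger than the input
```

The second call shows the flip side of the trade-off described above. Incompressible pages gain nothing from zRAM, and the CPU time spent compressing them is wasted.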
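
The LRU walk-through in section 4 can be replayed with a minimal Python sketch of exact LRU replacement. Two inputs are assumptions inferred from the description, because the figure itself is not reproduced:

- a capacity of four page frames;
- the access sequence 1, 2, 3, 4, 1, 2, 5.

`simulate_lru` is a hypothetical helper. Keep in mind that the kernel does not track an exact LRU order; it approximates one with active and inactive lists.

```python
from collections import OrderedDict


def simulate_lru(accesses, capacity):
    """Replay page accesses through `capacity` page frames managed by exact LRU.

    Returns one (page, evicted, order) tuple per access, where `evicted` is the page
    pushed out (or None) and `order` lists resident pages from least to most recently used.
    """
    frames = OrderedDict()  # key order doubles as recency order: first key = LRU page
    history = []
    for page in accesses:
        evicted = None
        if page in frames:
            frames.move_to_end(page)  # a hit makes this page the most recently used
        else:
            if len(frames) == capacity:
                evicted, _ = frames.popitem(last=False)  # memory full: drop the LRU page
            frames[page] = True
        history.append((page, evicted, list(frames)))
    return history


if __name__ == "__main__":
    # Assumed sequence, inferred from the section 4 description (one access per column).
    steps = simulate_lru([1, 2, 3, 4, 1, 2, 5], capacity=4)
    for column, (page, evicted, order) in enumerate(steps, start=1):
        print(f"column {column}: access {page}, evicted {evicted}, LRU -> MRU {order}")
    # column 7: access 5, evicted 3, LRU -> MRU [4, 1, 2, 5]
```

With these inputs the least recently used page is 1 after column 4, 2 after column 5 and 3 after column 6. Page 3 is then the one evicted in column 7, matching the text.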
